We have heard from many organizations that they want to keep their data and AI models on-premises for security, compliance, or cost reasons. To address this need, we are excited to announce support for locally hosted AI models within our platform.

This matters especially to organizations that bring their own data into our analytics landscape and do not want to share it with third-party cloud AI providers.

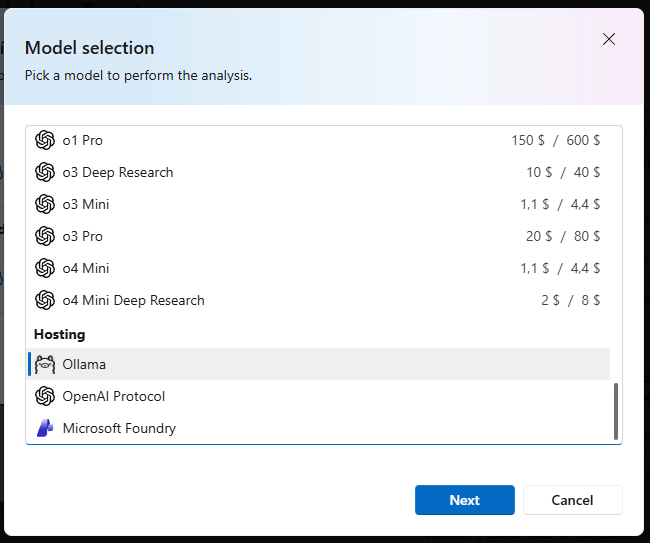

Locally hosted AI models are now available in the model picker

We aim to cover a wide range of solutions to match the diverse needs of our customers:

- To cover a wide range of open-source models, we added support for Ollama, one of the most popular local AI model hosting solutions. With the Ollama integration, users can easily connect their locally hosted models to our platform.

- These models can be hosted in various environments, including NVIDIA's recently announced DGX Spark hardware.

- Besides Ollama, any local model hosting solution that is compatible with the OpenAI protocol can be integrated with our platform using standard API protocols.

- For customers who prefer a balanced approach, we added support for Microsoft Foundry, which enables them to host AI models in their own customizable, compliant, and scalable cloud environment.
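For readers who want to check that a local server speaks the OpenAI-style protocol before connecting it, here is a minimal sketch of such a request. It assumes an Ollama server on its default local address (`http://localhost:11434`) exposing the OpenAI-compatible `/v1/chat/completions` route. The model name `llama3.2` and the sentiment prompt are placeholders chosen for illustration. This only shows what the protocol looks like from a client, not how our platform connects internally.

```python
import json
import urllib.request

# Assumption: Ollama's default local address with its OpenAI-compatible prefix.
BASE_URL = "http://localhost:11434/v1"


def build_chat_request(base_url, model, text, api_key="unused"):
    """Build an OpenAI-style chat completion request for a local server."""
    payload = {
        "model": model,  # hypothetical model name, use one you have pulled locally
        "messages": [
            {
                "role": "system",
                "content": "Classify the sentiment of the text as positive, negative, or neutral.",
            },
            {"role": "user", "content": text},
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Local servers typically ignore the key, but OpenAI-style clients send one.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the text of the first completion choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request(BASE_URL, "llama3.2", "The onboarding was quick and painless.")
    print(send(req))
```

If the server answers this request, it should be reachable by any client that follows the same protocol. Pointing `BASE_URL` at a different OpenAI-compatible host is the only change needed to test another hosting solution.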

Models hosted in any of these ways can be integrated into various parts of our platform, including Documents, Social Analytics, Alerts, Dashboards, and Surveys, to perform any text analysis task.

Usage of these models is not billed twice in our system: customers are charged only for the product features within our platform.

Peter

Chief Technology Officer